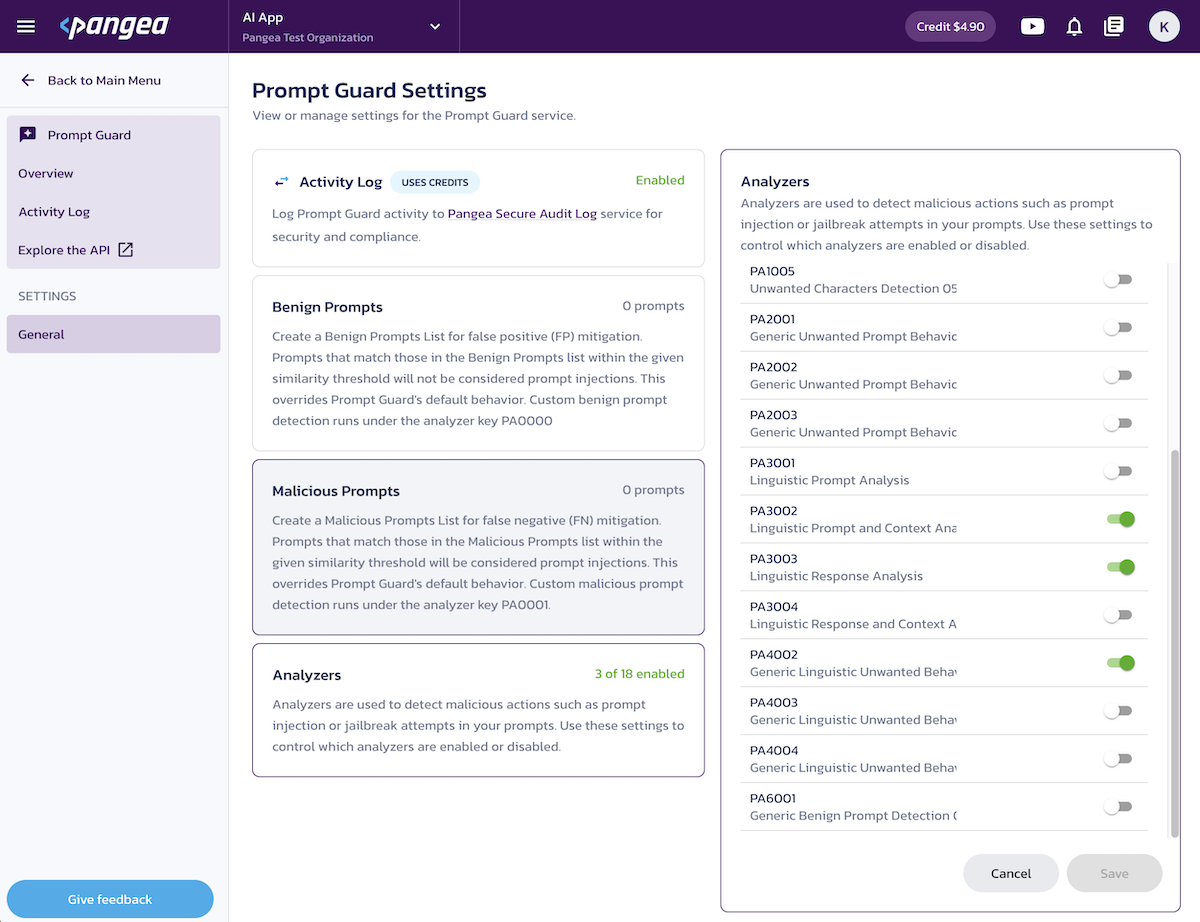

Prompt Guard Settings

On the Prompt Guard Settings page in your

Pangea User Console , you can adjust the service configuration for optimal effectiveness and performance, as well as control the enablement of its Activity Log.

Activity Log

The Activity Log for Prompt Guard captures the details of service calls. You can view a summary of Prompt Guard activity on its Overview page and access individual logs on the Activity Log page in your Pangea User Console.

Learn more about using this feature on the Activity Log documentation page.

Configuration Options

The Activity Log settings integrate with the Secure Audit Log service to enable attribution and accountability in your AI application. This integration is enabled by default and uses the AI Activity Audit Log Schema, which is purpose-built to capture AI application activity.

Configuration options:

- Enable Log Prompt Guard Activities (default)

- Disable Log Prompt Guard Activities

In the Activity Log panel under AI Guard General settings, you can view the name and ID of the schema used to record key details of each service call, including timestamps, inputs, outputs, detections, and contextual information.

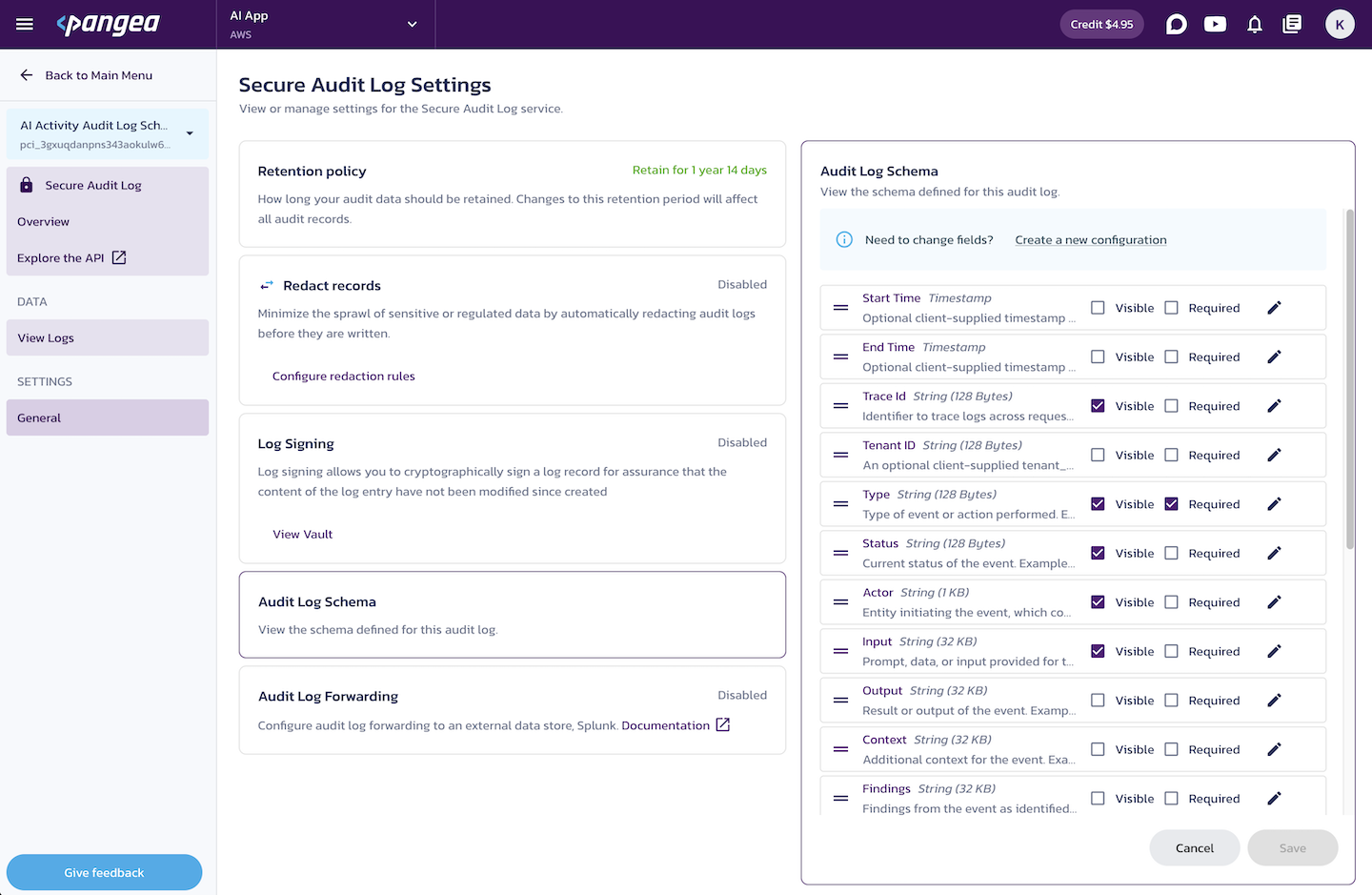

Audit Log Schema

View and configure the schema fields

You can view and configure the visibility of fields in your Prompt Guard audit schema, as well as set whether they are required on the Secure Audit Dialog configuration pages:

- In your Pangea User Console , click on the Secure Audit Log service name. If the service is not active, follow the wizard and accept the default settings to enable it.

- Select your AI Activity Audit Log Schema from the top left in the service sidebar.

- Click General in the sidebar.

- Under Secure Audit Log Settings, click the Audit Log Schema tile.

- In the dialog on the right, you can view the schema fields, update their visibility, and change whether they are required.

- Click Save to apply your changes.

Use the Schema in Your Application

Optionally, you can use this schema to capture additional details in your AI application. This allows you to view your application-specific events alongside the service activity in the Prompt Guard Activity Log, with a summary on the service's Overview page.

To send log data to Secure Audit Log from your application code:

- Click the Overview link in the Secure Audit Log sidebar.

- On the Secure Audit Log Overview page, in the Configuration Details section, obtain the necessary credentials to make an API call to Secure Audit Log:

- Your AI Activity Audit Log Schema Config ID (ensure the schema is selected in the top left sidebar).

- The project Domain.

- The Default Token to authorize your application requests to the Secure Audit Log service APIs.

Learn more about configuring Secure Audit Log in its documentation.

Benign Prompts

This setting helps mitigate false positives (FP). If innocent prompts are incorrectly flagged as malicious, you can add them to the Benign Prompts list. The setting also allows you to configure a similarity threshold. When Prompt Guard processes a new prompt, it compares it against the stored Benign Prompts. If a match is found within the threshold, the prompt is considered benign, and Prompt Guard will return that verdict.

Configuration options:

- Enable - Requires the Benign Prompts list to be populated.

- Disable (default).

- Adjust Similarity Threshold.

- Add or delete benign prompt examples:

- Use the + Prompt button to add a new prompt.

- Use the - button in the Prompts list to delete.

- Use the Save button to apply your changes.

Malicious Prompts

This setting helps mitigate false negatives (FN). If malicious prompts are incorrectly flagged as benign, you can add them to the Malicious Prompts list. The setting also allows you to configure a similarity threshold. When Prompt Guard processes a new prompt, it compares it against the stored Malicious Prompts. If a match is found within the threshold, the prompt is considered malicious, and Prompt Guard will return that verdict.

Configuration options:

- Enable - Requires the Malicious Prompts list to be populated.

- Disable (default).

- Adjust Similarity Threshold.

- Add or delete malicious prompt examples:

- Use the + Prompt button to add a new prompt.

- Use the - button in the Prompts list to delete.

- Use the Save button to apply your changes.

Analyzers

Prompt Guard includes a collection of analyzers used to determine whether a given prompt is malicious or benign. The list of analyzers may evolve as we continue to improve Prompt Guard's capabilities.

The Analyzers configuration page allows you to enable or disable any analyzers available in Prompt Guard. By default, several analyzers are enabled, offering an optimized configuration for both security and performance.

The following categories of analyzers are provided, grouped by their underlying approach:

- PA100x - These analyzers use heuristic methods to spot common attack patterns, such as the notorious "DAN" jailbreak prompts.

- PA200x - Built with classifiers using techniques like neural networks and SVMs, these analyzers evaluate prompts for generic unwanted behaviors.

- PA300x - Leveraging cloud LLMs, these analyzers check for prompt injection using multiple analysis methods, benefiting from the scalability and diverse perspectives of cloud-based models.

- PA400x - These analyzers employ fine-tuned models or small LLMs trained on a ground truth dataset, offering refined detection capabilities tailored to specific patterns.

- PA600x - These analyzers are dedicated to generic benign prompt detection, ensuring that known safe patterns are correctly identified and not falsely flagged as malicious.

Was this article helpful?