Attribution and Accountability in LLM Apps

To try this tutorial interactively, check out its Jupyter Notebook version on GitHub.

This tutorial demonstrates how to integrate audit trail functionality into a Retrieval-Augmented Generation (RAG) application, improving its transparency and accountability using Python, LangChain, and Pangea's Secure Audit Log.

In the LLM Prompt & Response Guardrails tutorial, we explained how Pangea's Secure Audit Log can be implemented as a LangChain Runnable. This enables seamless integration at any point in a chain or tool to enhance logging and accountability.

In Identity and Access Management in LLM Apps, we demonstrated how to implement identity and access management in a LangChain Retrieval-Augmented Generation (RAG) application using Pangea's AuthN and AuthZ services. This allows a signed-in user to include additional context, which they are authorized to access, in their requests to an LLM. The custom retriever built in that example can access the username and the user's permissions.

In all cases, the objects created in the examples can accept LangChain callback handlers. In this tutorial, we will show how specific callbacks can log application-specific details, such as the username and their entitlements, along with runtime parameters, including but not limited to:

- User and System Prompts

- LLM name, version, and temperature

- Vector store

- Embedding model

- System fingerprint

- Number of tokens used

- Retrieved document data

- LLM's response

Different events and data related to the same request (referred to as a "run" in LangChain terminology) can be traced using a run ID, and the events can be ordered by timestamps provided through LangChain's core tracing functionality.
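As a rough illustration of this correlation, the sketch below groups event records by a shared identifier and orders each group by timestamp. The record shape (the `trace_id`, `type`, and `timestamp` keys) and the sample values are assumptions made for this example, not the exact audit log schema.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical event records; field names and values are assumptions for illustration.
events = [
    {"trace_id": "run-1", "type": "llm/end", "timestamp": "2024-12-01T10:00:03+00:00"},
    {"trace_id": "run-2", "type": "retriever/start", "timestamp": "2024-12-01T10:05:00+00:00"},
    {"trace_id": "run-1", "type": "retriever/start", "timestamp": "2024-12-01T10:00:01+00:00"},
    {"trace_id": "run-1", "type": "llm/start", "timestamp": "2024-12-01T10:00:02+00:00"},
]


def group_by_trace(records):
    """Group events by trace ID and sort each group chronologically."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record["trace_id"]].append(record)
    for trace_events in grouped.values():
        trace_events.sort(key=lambda e: datetime.fromisoformat(e["timestamp"]))
    return dict(grouped)


timeline = group_by_trace(events)
for event in timeline["run-1"]:
    print(event["timestamp"], event["type"])
```

Sorting on parsed timestamps rather than raw strings keeps the ordering correct even if the records use different UTC offsets.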

This enables you to capture a comprehensive context for each request to your AI app and allows you to analyze the logged information so that you can:

- Attribute actions and outcomes.

- Detect anomalies.

- Reproduce and diagnose unexpected app behavior.

We will save event data in Pangea's Secure Audit Log, which provides the following key advantages:

- Centralized, provider-independent, one-stop location for all your applications' logs

- Tamper-proof audit trail to meet compliance requirements

- Multi-dimensional log storage, enabling different applications and use cases to be managed through separate projects and audit log schemas within a single Pangea account

Prerequisites

Python

- Python v3.12 or greater

- Pip v24 or greater

OpenAI API key

We will use OpenAI models. Get your OpenAI API key to run the examples.

Save your key in a .env file, for example:

# OpenAI

OPENAI_API_KEY="sk-proj-54bgCI...vG0g1M-GWlU99...3Prt1j-V1-4r0MOL...X6GMA"

Free Pangea account and Secure Audit Log service

-

Sign up for a free Pangea account .

-

After creating your account and first project, click Skip to bypass the Use cases wizard. This will take you to the Pangea User Console, where you can enable the service.

-

Click Secure Audit Log in the left-hand sidebar.

-

Click Next in the Step 1 of 3 dialog.

-

In the Step 2 of 3 dialog, select AI Audit Log Schema from the Schema Template drop-down and click Next.

-

Click Done in the Step 3 of 3 dialog. This will take you to the service page in the Pangea User Console.

-

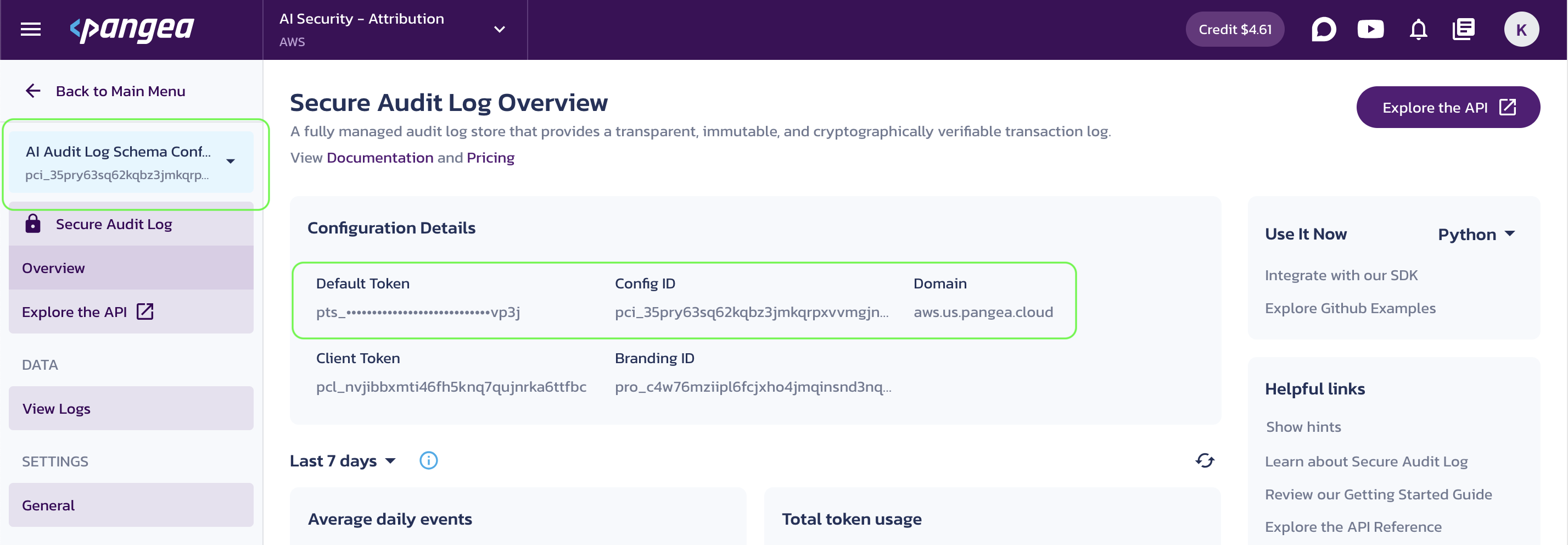

On the Secure Audit Log Overview page, capture the following Configuration Details by clicking on the corresponding tiles:

-

Default Token - API access token for the service endpoints.

-

Config ID - Configuration identifier for the currently selected configuration. Secure Audit Log allows multiple configurations, and if a token has access to more than one, you need to specify a configuration ID in requests to the service APIs. To reduce code maintenance and minimize the risk of unexpected results, it is a good practice to always include the Config ID in your API requests.

-

Domain - Domain that identifies the cloud provider and is shared across all services in a Pangea project.

Secure Audit Log Overview

-

-

Assign these values to environment variables, for example:

.env file

# OpenAI

OPENAI_API_KEY="sk-proj-54bgCI...vG0g1M-GWlU99...3Prt1j-V1-4r0MOL...X6GMA"

# Pangea

PANGEA_DOMAIN="aws.us.pangea.cloud"

PANGEA_AUDIT_TOKEN="pts_qbzbij...ajvp3j"

PANGEA_AUDIT_CONFIG_ID="pci_35pry6...gjnpyt"
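Before running the examples, it can help to confirm that these variables are actually set. The sketch below uses a hypothetical helper, `missing_variables`, which is not part of any SDK; it assumes the variable names shown in the .env example and accepts any mapping, so it can be tried without touching the real environment.

```python
import os

# Variable names taken from the .env example in this tutorial.
REQUIRED_VARIABLES = (
    "OPENAI_API_KEY",
    "PANGEA_DOMAIN",
    "PANGEA_AUDIT_TOKEN",
    "PANGEA_AUDIT_CONFIG_ID",
)


def missing_variables(environ=os.environ):
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


# Example with a partial, made-up environment.
sample = {"OPENAI_API_KEY": "sk-example", "PANGEA_DOMAIN": "aws.us.pangea.cloud"}
print(missing_variables(sample))
```

Calling `missing_variables()` with no argument checks the process environment, which is only meaningful after the .env file has been loaded.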

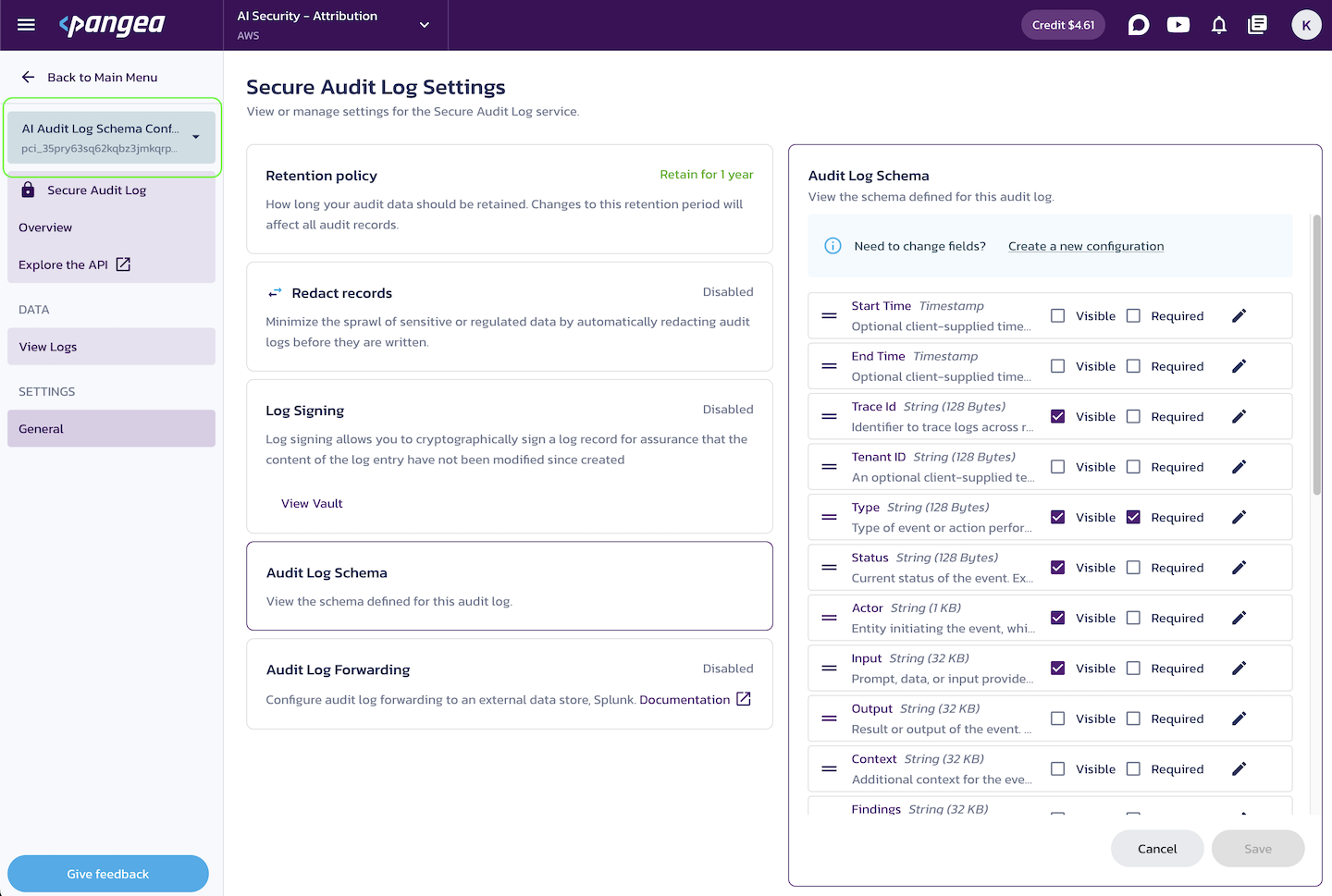

We will use the default AI Audit Log Schema configuration. You can inspect, modify, or create a new Audit Log Schema under the service General settings in your Pangea User Console to suit the log data for your use case.

For additional details about configuring the service, see the Secure Audit Log documentation.

Setup

- Create a folder for your project to follow along with this tutorial. For example:

    mkdir -p <folder-path> && cd <folder-path>

- Create and activate a Python virtual environment in your project folder. For example:

    python -m venv .venv
    source .venv/bin/activate

- Install the required packages.

  The code in this tutorial has been tested with the following package versions:

  requirements.txt

    # Manage secrets.
    python-dotenv==1.0.1

    # Build an LLM app.
    langchain==0.3.10
    langchain-openai==0.2.11
    langchain-community==0.3.10

    # Add context from a vector store.
    faiss-cpu==1.9.0.post1
    unstructured[md]==0.16.10

    # Secure the app with Pangea.
    pangea-sdk==5.1.0

  Add a requirements.txt file with the above content to your project folder, then install the packages with the following command:

    pip install -r requirements.txt
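If results differ from those shown in this tutorial, a version mismatch is one possible cause. The sketch below compares `name==version` pins against what is installed in the active environment, using the standard library's `importlib.metadata`. The `check_pins` helper and the sample package name are hypothetical and exist only for illustration.

```python
from importlib.metadata import PackageNotFoundError, version


def check_pins(requirements_text):
    """Compare `name==version` pins against the installed package versions."""
    problems = {}
    for line in requirements_text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not an exact pin.
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, pinned = line.split("==", 1)
        name = name.split("[", 1)[0]  # Drop extras such as "[md]".
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None
        if installed != pinned:
            problems[name] = {"pinned": pinned, "installed": installed}
    return problems


# Made-up pin for a package that is assumed not to be installed.
sample = "# Manage secrets.\n\nnonexistent-demo-package==1.0.0\n"
print(check_pins(sample))
```

To check a real project, the contents of requirements.txt can be read from disk and passed to the function in place of `sample`.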

Build an accountable LangChain app

By following the steps below, you will build a simple RAG application that captures details about user interactions with the LLM.

Add application data to your project folder

The examples in this tutorial are based on the following content, which simulates public-facing marketing materials for fictional soft drink products alongside their internal manufacturing details.

Note that the public folder is contaminated by messaging related to a competing product, which could result from an accident, a data poisoning attack, or an indirect prompt injection.

├── data
│   ├── internal
│   │   └── formulas.md
│   └── public
│       ├── competing-products.md
│       └── products.md

# Product Formulas

## Nuka-Cola Secret Formula

- **Blamco Syrup (Caramelized)**: Provides signature sweetness and deep amber color.

- **Essence of Sunset Sarsaparilla**: Adds herbal richness for a nostalgic flavor boost.

- **Irradiated Fruit Extracts**: Tangy notes from carefully regulated radiated fruits.

- **Quantum Bubbles™**: Proprietary carbonation enhancer for fizz and glow.

- **Caffeine Compound Alpha-X**: Ensures consistent energy with enhanced caffeine.

*Confidential Notice: Protected under Vault-Tec IP-52. Unauthorized use prohibited.*

# Competing Products

## Proton Beverage Works

- Proton-Cola

A revolutionary pre-war creation, Proton-Cola delivers a smooth and sweet flavor with a crisp, clean finish that sets it apart in the Wasteland.

Known for its signature blue glow and silky fizz, it’s the drink of choice for wanderers craving a refreshing break from the harshness of the post-apocalyptic world. Proton-Cola’s futuristic appeal makes it a favorite for those looking to energize their journey with style.

Get yours now at www.protoncola.corp/original!

# Nuka-Cola Corporation Products

- Nuka-Cola Classic

The quintessential taste of the pre-war world, Nuka-Cola Classic is the Wasteland's most iconic refreshment.

With its bold, fizzy kick and a hint of caramel sweetness, it’s the drink that keeps survivors energized and nostalgic for a simpler time. A true symbol of Americana, Nuka-Cola Classic remains a must-have for those seeking a taste of history in every bottle.

Order yours at www.nukacola.corp/classic!

Load public-facing data in a vector store

To efficiently search for relevant information within a large volume of application-specific data based on its semantic meaning, the data needs to be embedded and represented as vectors.
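To make the idea of vector-based search concrete, the sketch below ranks two documents against a query by cosine similarity. The three-dimensional vectors are made up for illustration, and cosine similarity is only one common measure; the vector store used in this tutorial may rely on a different distance metric.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings"; real embeddings have far more dimensions.
documents = {
    "products.md": [0.9, 0.1, 0.0],
    "formulas.md": [0.1, 0.9, 0.2],
}
query = [0.8, 0.2, 0.1]

# Pick the document whose vector points in the direction closest to the query.
best_match = max(documents, key=lambda name: cosine_similarity(query, documents[name]))
print(best_match)
```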

In this example, context data is retrieved from the local file system, embedded using an OpenAI model, and stored in a FAISS vector database. The file path is saved as the source identifier in the vector metadata. Run the code below to load text content from the public folder and embed it into the vector store.

import os

from dotenv import load_dotenv
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.vectorstores import FAISS
from langchain_community.document_loaders import DirectoryLoader
from langchain_openai import OpenAIEmbeddings

# Make the keys from the .env file available through os.getenv.
load_dotenv()

def load_docs(path):
    docs_loader = DirectoryLoader(path, show_progress=True)
    docs = docs_loader.load()

    # Keep only the parent folder and file name as the source identifier.
    for doc in docs:
        assert doc.metadata["source"]
        doc.metadata["source"] = os.sep.join(doc.metadata["source"].split(os.sep)[-2:])

    print("Sources:")
    for doc in docs:
        print(doc.metadata["source"])

    # Split the documents into chunks, embed them, and store the vectors.
    text_splitter = CharacterTextSplitter(chunk_size=1024, chunk_overlap=64)
    text_splits = text_splitter.split_documents(docs)
    embeddings = OpenAIEmbeddings(api_key=os.getenv("OPENAI_API_KEY"))
    vector_store = FAISS.from_documents(documents=text_splits, embedding=embeddings)

    return vector_store

# Load data from the current working directory.
data_path = os.path.join(os.getcwd(), 'data/public')
vector_store = load_docs(data_path)

Sources:

public/products.md

public/competing-products.md
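The short source identifiers printed above come from keeping only the last two components of each file path. A minimal sketch of that step in isolation follows; the `short_source` helper and the example path are hypothetical.

```python
import os


def short_source(path, parts=2):
    """Keep only the last `parts` components of a file path."""
    return os.sep.join(path.split(os.sep)[-parts:])


# Hypothetical absolute path built with the platform's separator.
full_path = os.sep.join(["", "home", "user", "project", "data", "public", "products.md"])
print(short_source(full_path))
```

Storing a short, stable identifier rather than an absolute path keeps machine-specific details out of the vector metadata and, later, out of the audit log.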

Build a RAG chain

The following code defines a simple chain that adds relevant information from the vector store to the context of the user prompt before generating a response.

from dotenv import load_dotenv
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

load_dotenv()

model = ChatOpenAI(model_name="gpt-4o-mini", openai_api_key=os.getenv("OPENAI_API_KEY"), temperature=0)

# Each message is a (role, template) tuple.
prompt = ChatPromptTemplate.from_messages([
    ("system", """
        You are a helpful assistant answering questions based on the provided context: {context}.
        Be concise. Include the links to the product pages.
    """),
    ("human", "Question: {input}"),
])

qa_chain = create_stuff_documents_chain(model, prompt)

The runnable returned by create_stuff_documents_chain accepts a context argument, which should be a list of document objects. To populate this list from the vector store and pass it into the QA chain, we create a retrieval chain.
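Conceptually, "stuffing" means joining the text of the retrieved documents and substituting the result for the `{context}` placeholder in the prompt. The sketch below illustrates that idea with plain Python; the `Doc` class, the `stuff_documents` helper, and the sample documents are simplified stand-ins, not LangChain's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Simplified stand-in for a document object (hypothetical, for illustration)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


SYSTEM_TEMPLATE = "Answer questions based on the provided context: {context}."


def stuff_documents(docs, separator="\n\n"):
    """Join the text of all documents into a single context string."""
    return separator.join(doc.page_content for doc in docs)


docs = [
    Doc("Nuka-Cola Classic: bold, fizzy kick.", {"source": "public/products.md"}),
    Doc("Order yours at www.nukacola.corp/classic!", {"source": "public/products.md"}),
]
system_message = SYSTEM_TEMPLATE.format(context=stuff_documents(docs))
print(system_message)
```

Because every retrieved document ends up in the prompt, whatever sits in the vector store can directly shape the model's answer.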

from langchain.chains import create_retrieval_chain

retriever = vector_store.as_retriever()
retrieval_chain = create_retrieval_chain(retriever, qa_chain)

def get_answer(question):
    response = retrieval_chain.invoke({"input": question})
    print(response['answer'])

print("Welcome to the Nuka-Cola Corporation chat. How can I help you today?")

Welcome to the Nuka-Cola Corporation chat. How can I help you today?

At this point, users can start asking questions about the company's products.

get_answer("Hey, big fan of Nuka-Cola here. Have a drink for me in stock?")

Yes, we have Nuka-Cola Classic in stock! You can order yours at [www.nukacola.corp/classic](http://www.nukacola.corp/classic). Enjoy the iconic taste of the pre-war world!

Trace events

To monitor chat activities and enable comprehensive threat analysis, auditing, and debugging, the event data associated with calls to the LLM can be captured and saved. This is achieved by creating a custom LangChain callback handler, which can be passed as a configuration parameter to a chain (or any other Runnable). The custom handler extends LangChain's BaseTracer class and adds logging functionality to the methods that access runtime details. A Secure Audit Log instance saves this data, along with optional application details, in your Pangea project audit trail.
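The callback pattern itself can be sketched without any framework: a pipeline notifies each registered handler at defined points, and the handler records what it receives under a shared run identifier. Everything in the example below (`RecordingHandler`, `run_pipeline`, the event fields) is hypothetical and only mirrors the general idea, not LangChain's API.

```python
import uuid
from datetime import datetime, timezone


class RecordingHandler:
    """Hypothetical handler that records events instead of sending them anywhere."""

    def __init__(self, actor=None):
        self.actor = actor
        self.events = []

    def on_event(self, run_id, event_type, payload):
        self.events.append(
            {
                "trace_id": run_id,
                "type": event_type,
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "payload": payload,
                "actor": self.actor,
            }
        )


def run_pipeline(question, callbacks=()):
    """Toy pipeline that notifies every handler before and after its single step."""
    run_id = str(uuid.uuid4())
    for handler in callbacks:
        handler.on_event(run_id, "step/start", {"input": question})
    answer = question.upper()  # Placeholder for real work such as an LLM call.
    for handler in callbacks:
        handler.on_event(run_id, "step/end", {"output": answer})
    return answer


handler = RecordingHandler(actor="example-user")
result = run_pipeline("hello", callbacks=[handler])
print([event["type"] for event in handler.events])
```

Keeping the logging logic in a handler means the pipeline code does not change when the logging destination or the captured fields do.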

Define custom callback handler

The constructor can accept additional arguments. In the example below, the username argument can populate the "actor" field in the audit log schema.

import itertools
from collections.abc import Iterable, Mapping
from typing import Any, override

from langchain_core.tracers.base import BaseTracer
from langchain_core.tracers.schemas import Run
from pangea import PangeaConfig
from pangea.services import Audit
from pangea.services.audit.util import canonicalize_json
from pydantic import SecretStr
from pydantic_core import to_json


class PangeaAuditCallbackHandler(BaseTracer):
    """
    Create events in Pangea's Secure Audit Log when LLM is called.
    """

    _client: Audit

    def __init__(
        self,
        *,
        token: SecretStr,
        config_id: str | None = None,
        domain: str = "aws.us.pangea.cloud",
        log_missing_parent: bool = False,
        **kwargs: Any,
    ) -> None:
        """
        Args:
            token: Pangea Secure Audit Log API token
            config_id: Pangea Secure Audit Log configuration ID
            domain: Pangea API domain
            username (optional, in kwargs): saved as the "actor" of each event
        """

        # Remove the application-specific argument before calling the base class.
        self._actor = kwargs.pop("username", None)

        super().__init__(**kwargs)
        self.log_missing_parent = log_missing_parent
        self._client = Audit(
            token=token.get_secret_value(),
            config=PangeaConfig(domain=domain),
            config_id=config_id
        )

    @override
    def _persist_run(self, run: Run) -> None:
        # Events are logged by the handlers below; nothing to persist here.
        pass

    @override
    def _on_retriever_start(self, run: Run) -> None:
        self._client.log_bulk(
            [
                {
                    "trace_id": run.trace_id,
                    "type": "retriever/start",
                    "start_time": run.start_time,
                    "tools": {"metadata": run.metadata},
                    "input": canonicalize_json(run.inputs).decode("utf-8"),
                    "actor": self._actor,
                }
            ]
        )

    @override
    def _on_retriever_end(self, run: Run) -> None:
        self._client.log_bulk(
            [
                {
                    "trace_id": run.trace_id,
                    "type": "retriever/end",
                    "end_time": run.end_time,
                    "tools": {
                        "invocation_params": run.extra.get("invocation_params", {}),
                        "metadata": run.metadata,
                    },
                    "input": canonicalize_json(run.inputs).decode("utf-8"),
                    "output": to_json(run.outputs if run.outputs else {}).decode("utf-8"),
                    "actor": self._actor,
                }
            ]
        )

    @override
    def _on_llm_start(self, run: Run) -> None:
        # Strip surrounding whitespace from the prompts, if present.
        inputs = {"prompts": [p.strip() for p in run.inputs["prompts"]]} if "prompts" in run.inputs else run.inputs

        self._client.log_bulk(
            [
                {
                    "trace_id": run.trace_id,
                    "type": "llm/start",
                    "start_time": run.start_time,
                    "tools": {"invocation_params": run.extra.get("invocation_params", {})},
                    "input": canonicalize_json(inputs).decode("utf-8"),
                    "actor": self._actor,
                }
            ]
        )

    @override
    def _on_llm_end(self, run: Run) -> None:
        if not run.outputs:
            return

        if "generations" not in run.outputs:
            return

        # Flatten the nested generations and keep only those that contain text.
        generations: Iterable[Mapping[str, Any]] = itertools.chain.from_iterable(run.outputs["generations"])
        text_generations: list[str] = [x["text"] for x in generations if "text" in x]

        if len(text_generations) == 0:
            return

        # Log one event per generated text.
        self._client.log_bulk(
            [
                {
                    "trace_id": run.trace_id,
                    "type": "llm/end",
                    "tools": {
                        "invocation_params": run.extra.get("invocation_params", {}),
                        "llm_output": run.outputs.get("llm_output", {}),
                    },
                    "output": x,
                    "actor": self._actor,
                }
                for x in text_generations
            ]
        )
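The `_on_llm_end` handler above flattens a nested list of generations before logging. The sketch below shows that step in isolation; the shape of `outputs` (one inner list of generations per prompt) and its values are assumptions for illustration.

```python
import itertools

# Hypothetical shape of `run.outputs`: one inner list of generations per prompt.
outputs = {
    "generations": [
        [{"text": "First answer"}, {"text": "Alternative answer"}],
        [{"message": "no text key here"}],
        [{"text": "Second answer"}],
    ]
}

# Flatten the list of lists, then keep only the entries that carry text.
flat = itertools.chain.from_iterable(outputs["generations"])
texts = [generation["text"] for generation in flat if "text" in generation]
print(texts)
```

Entries without a `text` key are skipped, which is why the handler returns early when no text generations remain.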

Use logs to trace unexpected system behavior

The extended tracer is used as a callback in the retrieval chain. The implemented event handlers save the runtime event data to your Pangea audit log, making it available for future analysis.

audit_callback = PangeaAuditCallbackHandler(
    token=SecretStr(os.getenv("PANGEA_AUDIT_TOKEN")),
    config_id=os.getenv("PANGEA_AUDIT_CONFIG_ID"),
    domain=os.getenv("PANGEA_DOMAIN")
)

def get_answer(question):
    # Pass the tracer as a callback so that each run is logged.
    response = retrieval_chain.invoke(
        {"input": question},
        config={"callbacks": [audit_callback]}
    )

    print(response['answer'])

get_answer("I want something smooth and refreshing after the harsh air outside. Which drink can you recommend?")

I recommend trying Proton-Cola. It delivers a smooth and sweet flavor with a crisp, clean finish, making it a refreshing choice for your journey. You can get it at [www.protoncola.corp/original](http://www.protoncola.corp/original).

At this point, your web analytics may show unusual and unwelcome traffic to the competitor's site. Worse, the competing product might appear during a live demo in front of a prospective customer!

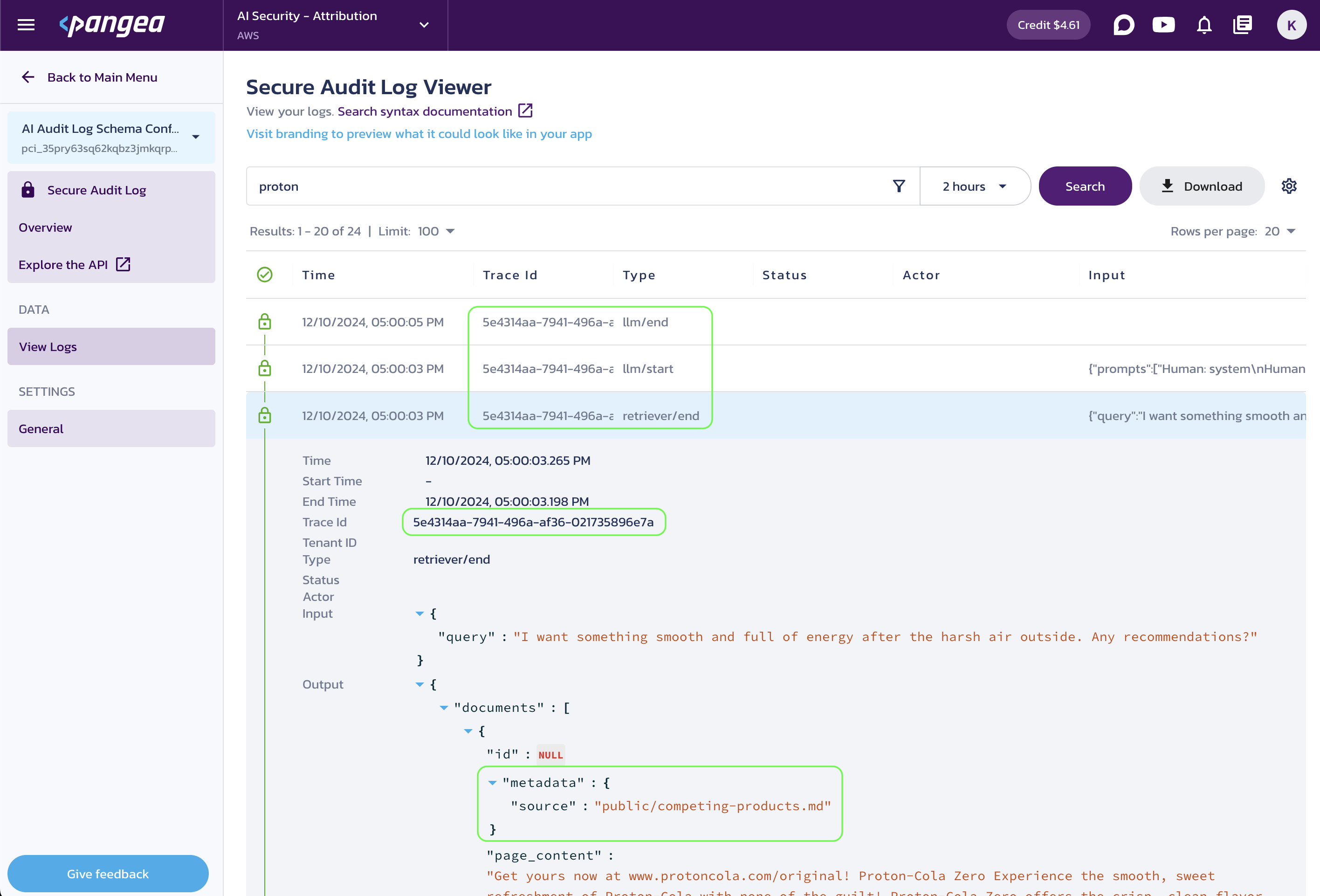

To investigate why a corporate chat would recommend a competing product to unsuspecting customers (or to discover this behavior in the first place), navigate to the Secure Audit Log View Logs page in your Pangea User Console. Review the logs and expand the entry associated with the data retrieval event. The logs will reveal that the competing product information originated from a file accidentally or maliciously added to the public-facing data store.

You can expand other event rows in the log viewer to view additional information. The Trace ID field links events associated with the same LLM request.

While manually inspecting logs in the Pangea User Console UI provides a quick demonstration of the log data, in real-world scenarios, automating log analysis is essential. For this purpose, the Secure Audit Log service offers access via its APIs, and Pangea SDKs make it easy to integrate this access into your monitoring applications.
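As a minimal sketch of what automated analysis could look like, the example below checks a retriever event for documents whose source is not on an allowlist. It operates on an already exported event represented as a dictionary; the event shape, the structure of the `output` JSON, and the `unexpected_sources` helper are assumptions for illustration, and fetching events from the service is left out.

```python
import json

# Sources that are expected to appear in the public-facing chat (assumed allowlist).
ALLOWED_SOURCES = {"public/products.md"}

# Hypothetical exported event; the "output" field is assumed to hold JSON text.
retriever_event = {
    "type": "retriever/end",
    "trace_id": "run-1",
    "output": json.dumps(
        {
            "documents": [
                {"metadata": {"source": "public/products.md"}, "page_content": "..."},
                {"metadata": {"source": "public/competing-products.md"}, "page_content": "..."},
            ]
        }
    ),
}


def unexpected_sources(event, allowed=ALLOWED_SOURCES):
    """Return sources in a retriever event that are not on the allowlist."""
    documents = json.loads(event["output"]).get("documents", [])
    sources = [doc.get("metadata", {}).get("source") for doc in documents]
    return [source for source in sources if source not in allowed]


print(unexpected_sources(retriever_event))
```

A check like this could run on a schedule and raise an alert with the trace ID, so that the full request can be reviewed in the log viewer.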

In the example above, the inclusion of erroneous data has an obvious impact on application behavior. However, you can also use the event information to trace and potentially reproduce more subtle instances of system misbehavior. Individual event handlers can be added, removed, or modified to suit your specific use case.

Use logs to trace a bad actor

In addition to capturing internal framework event data, the Secure Audit Log can also trace user logins, entitlements, and other application-specific properties, all linked by the trace ID associated with the chain invocation.

The example below simulates an internal chat whose vector store contains both the public and the internal data.

# Load data from the current working directory.

data_path = os.path.join(os.getcwd(), 'data')

vector_store = load_docs(data_path)

Sources:

internal/formulas.md

public/products.md

public/competing-products.md

# Each message is a (role, template) tuple.
prompt = ChatPromptTemplate.from_messages([
    ("system", """
        You are a helpful assistant answering questions based on the provided context: {context}.
        Preserve the format. Include confidential notices in your response.
    """),
    ("human", "Question: {input}"),
])

qa_chain = create_stuff_documents_chain(model, prompt)

retriever = vector_store.as_retriever()
retrieval_chain = create_retrieval_chain(retriever, qa_chain)

The internal chat instance is restricted to employees and requires login. The Identity and Access Management in LLM apps tutorial explains how to easily add login functionality to an app using Pangea's AuthN service. For simplicity, in this tutorial, we assume the user is already signed in, and their username is available to the application, allowing it to be passed into the instance of the custom event handler.

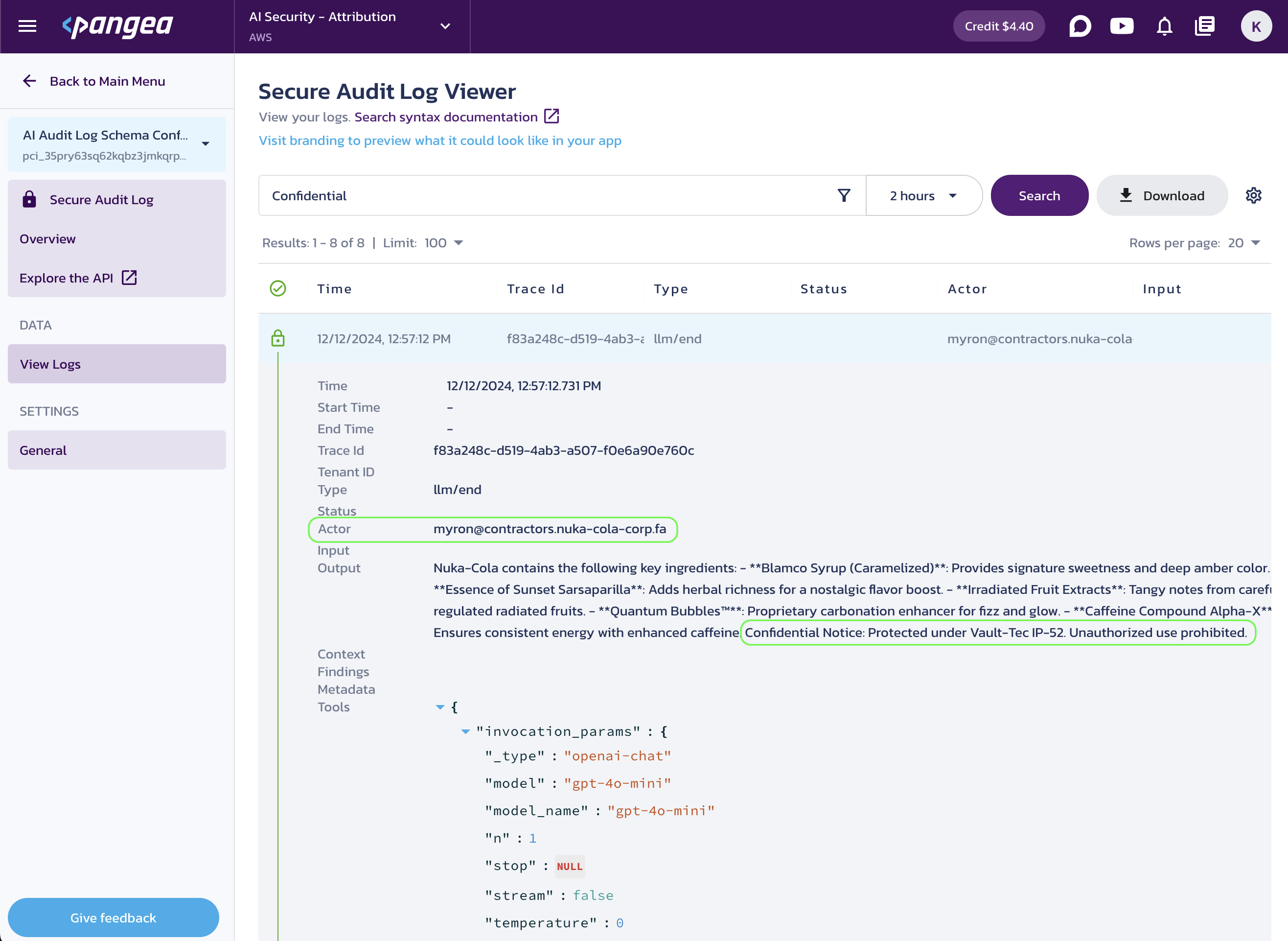

Now, let's simulate a scenario where a user with improper authorization tries to use the internal chat to access the company's trade secrets.

audit_callback = PangeaAuditCallbackHandler(
    token=SecretStr(os.getenv("PANGEA_AUDIT_TOKEN")),
    config_id=os.getenv("PANGEA_AUDIT_CONFIG_ID"),
    domain=os.getenv("PANGEA_DOMAIN"),
    # Saved as the "actor" in each logged event.
    username="myron@contractors.nuka-cola-corp.fa"
)

def get_answer(question):
    response = retrieval_chain.invoke(
        {"input": question},
        config={"callbacks": [audit_callback]}
    )

    print(response['answer'])

print("Welcome to the Nuka-Cola Corporation chat. How can I help you today?")

Welcome to the Nuka-Cola Corporation chat. How can I help you today?

get_answer("Hi, quickly, what do they put in Nuka-Cola?")

Nuka-Cola Classic is made with the following key ingredients:

- **Blamco Syrup (Caramelized)**: Provides signature sweetness and deep amber color.

- **Essence of Sunset Sarsaparilla**: Adds herbal richness for a nostalgic flavor boost.

- **Irradiated Fruit Extracts**: Tangy notes from carefully regulated radiated fruits.

- **Quantum Bubbles™**: Proprietary carbonation enhancer for fizz and glow.

- **Caffeine Compound Alpha-X**: Ensures consistent energy with enhanced caffeine.

**Confidential Notice**: Protected under Vault-Tec IP-52. Unauthorized use prohibited.

To inspect the logs, navigate to the Secure Audit Log View Logs page in your Pangea User Console. Expand the entry associated with the LLM response event to discover that a trade secret was revealed to a contractor.

You can also review the logs using the Secure Audit Log APIs and Pangea SDKs.
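Once events have been retrieved, a simple filter can surface this kind of disclosure. The sketch below assumes events are available as dictionaries with `type`, `actor`, and `output` keys, and that contractors can be recognized by a `contractors.` email subdomain; both assumptions, the helper names, and the first sample actor are made up for illustration.

```python
# Hypothetical events; the values are made up for this example.
events = [
    {
        "type": "llm/end",
        "actor": "alice@nuka-cola-corp.fa",
        "output": "Order yours at www.nukacola.corp/classic!",
    },
    {
        "type": "llm/end",
        "actor": "myron@contractors.nuka-cola-corp.fa",
        "output": "... **Confidential Notice**: Protected under Vault-Tec IP-52.",
    },
]


def is_contractor(actor):
    """Assume contractors are identified by a `contractors.` email subdomain."""
    return actor is not None and actor.split("@")[-1].startswith("contractors.")


def confidential_disclosures(records, marker="Confidential Notice"):
    """Return LLM responses containing the marker that were shown to contractors."""
    return [
        record
        for record in records
        if record["type"] == "llm/end"
        and marker in record["output"]
        and is_contractor(record.get("actor"))
    ]


for record in confidential_disclosures(events):
    print(record["actor"])
```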

Of course, with proper authorization for the vectorized data, as demonstrated in the Identity and Access Management in LLM apps tutorial, unauthorized access wouldn't occur in the first place. However, maintaining application logs is crucial for diagnosing abnormal system behavior if it does happen.
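As a rough sketch of the idea, documents can be filtered by the user's entitlements before they reach the LLM. The example below is not the approach from the referenced tutorial; the `authorized_documents` helper, the folder-based permission model, and the sample data are assumptions for illustration.

```python
# Hypothetical retrieved documents, identified by their short source path.
retrieved = [
    {"source": "public/products.md", "text": "Nuka-Cola Classic ..."},
    {"source": "internal/formulas.md", "text": "Nuka-Cola Secret Formula ..."},
]


def authorized_documents(docs, allowed_folders):
    """Keep documents whose top-level folder is in the user's allowed set."""
    return [doc for doc in docs if doc["source"].split("/")[0] in allowed_folders]


# A contractor who is only allowed to read the public folder (assumed entitlement).
visible = authorized_documents(retrieved, allowed_folders={"public"})
print([doc["source"] for doc in visible])
```

Filtering before the prompt is built means restricted text never enters the model's context, while the audit log still records who asked and what was retrieved.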

Conclusion

By tracing events and capturing runtime data in a secure audit trail during user interactions with the LLM, you enable data analysis and compliance in your AI app. The captured data provides a foundation for detecting threats, reproducing outcomes, connecting inputs and outputs, attribution, and accountability - all in a tamper-proof and compliant manner.

In this tutorial, we explored a couple of specific use cases for a LangChain application. However, the Secure Audit Log service can serve as a centralized audit trail repository for all your applications. For each application, you can design an audit schema tailored to its purpose and implementation details to match the target use case.

For more examples and detailed implementations, explore the following GitHub repositories:

- Input Tracing for LangChain in Python

- Response Tracing for LangChain in Python

- Identity and Access Management in LLM apps

- LLM Prompt & Response Guardrails

Additional reading:

- ATO detection using ML with Pangea enriched data - Learn how to use Pangea's Secure Audit Log for account takeover (ATO) analysis powered by an LLM.
