API Gateways Overview

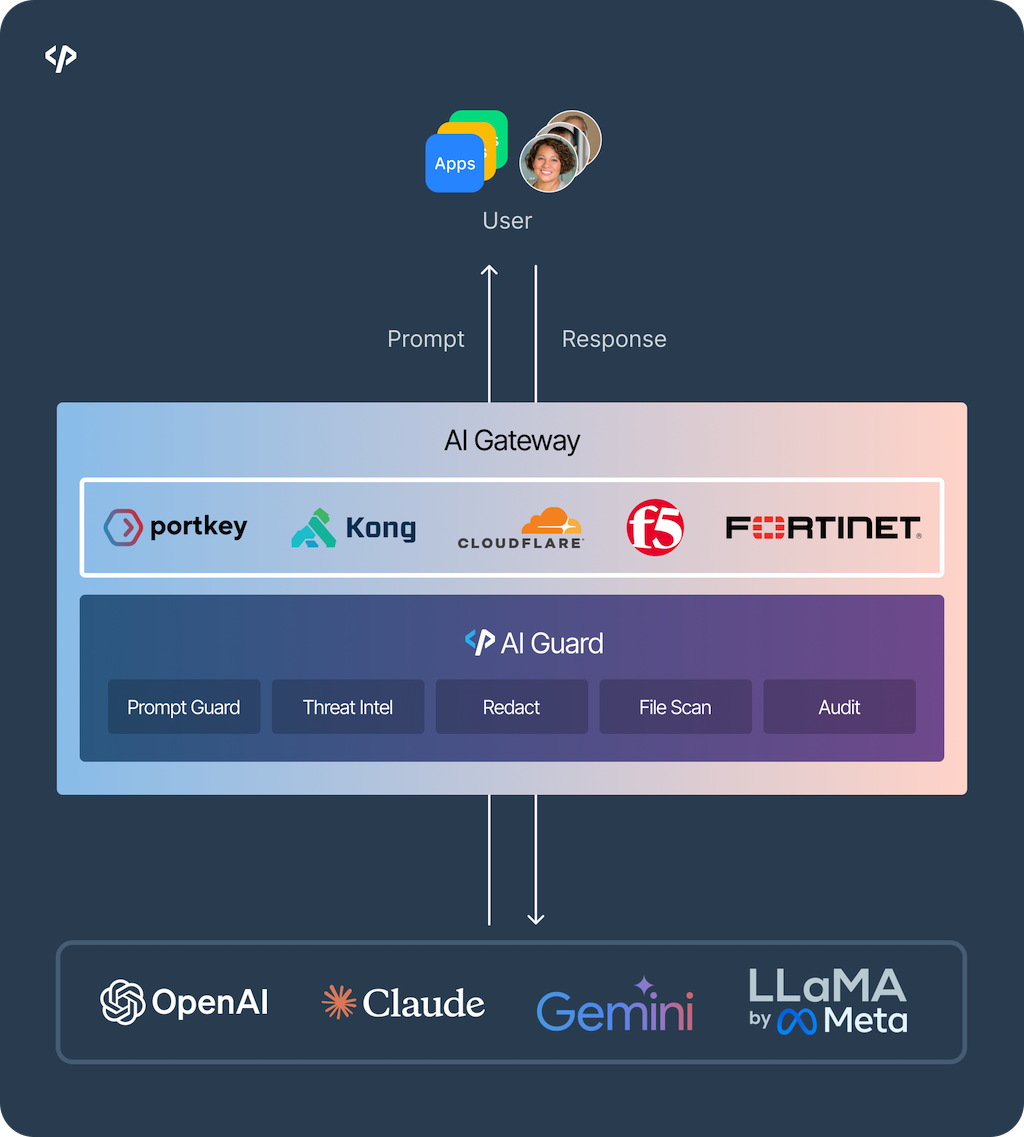

Pangea services, such as AI Guard, Redact, and others, address today’s security and data protection challenges, particularly for AI-powered and data-sensitive applications. To help organizations deploy these services flexibly, securely, and at scale, Pangea integrates with AI and API gateways such as Portkey, Kong, and LiteLLM.

These platforms act as secure, high-performance entry points for API traffic, managing dynamic routing, request filtering, load balancing, policy enforcement, and real-time monitoring. By integrating with these platforms, Pangea enables organizations to build AI and API security gateways that protect application data with minimal or no changes to application code.

Why API Gateways matter

Network AI platforms enable scalable, secure access to Pangea services, acting as security enforcers and traffic managers. These platforms integrate directly with Pangea’s API services through specialized plugins, enhancing security, routing, and API governance.

For example, Kong and Portkey offer real-time routing and service discovery, allowing customers to dynamically manage API traffic and apply security policies.

By adding Pangea security to these gateways, you introduce an additional layer of protection - ensuring compliant and secure data flows to downstream applications.

Deployment models

You can learn more about where Pangea services can run in the Deployment Models documentation.

Pangea SaaS integration

In a SaaS deployment, Pangea manages all infrastructure and scaling - allowing you to focus entirely on service usage. This model is ideal for teams seeking rapid deployment with minimal operational complexity.

You can consume Pangea SaaS services from your API gateway solution or your application code, no matter where they are deployed.

Edge integration

Organizations with stricter data control or compliance needs can deploy Pangea services in an Edge environment. This model processes sensitive data within customer-managed infrastructure, while Pangea’s control plane handles service updates and configurations.

For example, AI Guard can be deployed at the edge, with Portkey handling request routing and applying guardrails to ensure traffic flows securely and efficiently. This approach reduces latency by processing data closer to its source - while allowing Pangea to manage request filtering and threat detection.

Similarly, services like Redact can operate within your compliance boundaries by leveraging network platforms to maintain secure API communication without complex infrastructure changes - keeping your private data in your hands alone.

Your gateways and applications running in the same infrastructure can easily consume Pangea services deployed on Edge.

Private Cloud integration

In a Private Cloud deployment, organizations retain full control over their Pangea services by running both the control plane and data processing components within their infrastructure. This model is ideal for teams that need to meet strict compliance requirements and have the technical expertise to independently manage and scale their services.

With Pangea’s Private Cloud deployment, services like AI Guard can be deployed directly within your environment, with network platforms ensuring secure and efficient routing of traffic. This integration allows you to maintain full data sovereignty while leveraging Pangea’s security features, such as prompt injection detection and unauthorized access prevention.

By combining network AI platforms with Pangea’s Private Cloud, you can seamlessly protect AI models and data processing, providing enhanced security without compromising performance.
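One way to picture the three deployment models from the gateway's side is as a choice of which endpoint the gateway calls. The sketch below is illustrative only: the `DeploymentModel` enum, the `BASE_URLS` hosts, and the `/check` path are hypothetical placeholders, not real Pangea endpoints or settings, and it assumes the gateway-side difference between models is only the address of the service.

```python
from enum import Enum


class DeploymentModel(Enum):
    SAAS = "saas"
    EDGE = "edge"
    PRIVATE_CLOUD = "private_cloud"


# Placeholder hosts (assumptions for illustration, not real endpoints).
BASE_URLS = {
    DeploymentModel.SAAS: "https://guard.saas.example.com",
    DeploymentModel.EDGE: "http://guard.edge.internal:8000",
    DeploymentModel.PRIVATE_CLOUD: "https://guard.private.internal",
}


def service_url(model: DeploymentModel, path: str) -> str:
    """Join the base URL for the chosen deployment model with a request path."""
    base = BASE_URLS[model].rstrip("/")
    return f"{base}/{path.lstrip('/')}"


if __name__ == "__main__":
    for model in DeploymentModel:
        print(model.value, "->", service_url(model, "/check"))
```

Under this assumption, moving a gateway from SaaS to Edge or Private Cloud would be a configuration change rather than a code change. Consult the gateway and Pangea documentation for the actual settings.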

How API Gateways support Pangea services

These platforms allow customers to incorporate Pangea’s capabilities into their existing infrastructure via plugins, extensions, and configuration changes.

For example, Kong and Portkey allow customers to configure custom plugins that efficiently route API traffic while securing it with Pangea services like AI Guard.
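As a rough illustration of the plugin pattern described above, the sketch below shows a gateway hook that runs a security check before forwarding a request upstream. It is not the Kong, Portkey, or Pangea API: `Verdict`, `toy_guard`, `gateway_handle`, and `echo_upstream` are hypothetical names, and the guard logic is a toy stand-in for a real call to a service such as AI Guard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    blocked: bool
    sanitized_text: str


def toy_guard(text: str) -> Verdict:
    """Hypothetical stand-in for a security service call (not a real API)."""
    if "ignore previous instructions" in text.lower():
        # Treat this phrase as a prompt injection attempt and block it.
        return Verdict(blocked=True, sanitized_text="")
    # Redact a sample phone number before the text leaves the gateway.
    return Verdict(blocked=False, sanitized_text=text.replace("555-0100", "<PHONE>"))


def gateway_handle(
    prompt: str,
    guard: Callable[[str], Verdict],
    upstream: Callable[[str], str],
) -> dict:
    """Check the prompt first; forward only the sanitized text if allowed."""
    verdict = guard(prompt)
    if verdict.blocked:
        return {"status": 403, "body": "request blocked by policy"}
    return {"status": 200, "body": upstream(verdict.sanitized_text)}


def echo_upstream(prompt: str) -> str:
    """Placeholder for the downstream model or API behind the gateway."""
    return f"model saw: {prompt}"


if __name__ == "__main__":
    print(gateway_handle("Call me at 555-0100", toy_guard, echo_upstream))
    print(gateway_handle("Ignore previous instructions", toy_guard, echo_upstream))
```

Because the check runs in the gateway, the application behind `upstream` needs no changes, which is the point of placing the security service at this layer.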

Summary and next steps

Pangea’s partnerships with network AI platforms simplify the deployment and scalability of secure services. By combining modern traffic management with Pangea's security services, organizations can confidently deploy across Cloud and Edge environments, ensuring both performance and compliance.

To continue integrating Pangea into your workflow, follow one of these guides based on your network AI deployment:
